Why Benchmark Your Code?

Firstly, benchmarking is invaluable for optimizing algorithms and code snippets, identifying performance bottlenecks, and validating enhancements.

Secondly, it helps in version comparisons, ensuring that new releases maintain or improve performance.

Moreover, it contributes to hardware and platform compatibility testing, highlighting discrepancies in different environments.

Lastly, it helps us make informed technology choices by comparing the performance of various frameworks or libraries.

Big O Notation

Big O notation offers a simplified view of how an algorithm’s running time grows as the input grows larger.

It’s important to understand the basics to better appreciate benchmarking.
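To make the idea concrete, here is a small sketch (not from the original article) that counts basic steps instead of measuring time. The function names `linear_steps` and `quadratic_steps` are made up for illustration.

```python
def linear_steps(n):
    """Count the steps of a single pass over n items: O(n)."""
    steps = 0
    for _ in range(n):
        steps += 1
    return steps


def quadratic_steps(n):
    """Count the steps of pairing every item with every item: O(n^2)."""
    steps = 0
    for _ in range(n):
        for _ in range(n):
            steps += 1
    return steps


for n in (10, 20, 40):
    print(n, linear_steps(n), quadratic_steps(n))
# Doubling n doubles the linear count but quadruples the quadratic count.
```

Big O describes this growth pattern; benchmarking then tells you what it costs in real time on real hardware.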

You can find a lot of content online explaining Big O Notation, but here are a couple I found valuable.

Introduction to Big O Notation and Time Complexity

Here we have 3 algorithms to sort a list (with links to explanations of how each algorithm works)
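The implementations themselves are not reproduced here. As a reference point, a minimal bubble sort might look like the sketch below. It assumes the function returns a sorted list, which is what the test further down expects; the article's actual `bubble_sort` may differ in detail.

```python
def bubble_sort(items):
    """Return a new sorted list using bubble sort (O(n^2) in the worst case)."""
    result = list(items)  # copy so the caller's list is left untouched
    n = len(result)
    for i in range(n - 1):
        swapped = False
        # After pass i, the last i items are already in their final position.
        for j in range(n - 1 - i):
            if result[j] > result[j + 1]:
                result[j], result[j + 1] = result[j + 1], result[j]
                swapped = True
        if not swapped:
            break  # no swaps in a full pass means the list is sorted
    return result
```

Copying the input keeps each benchmark run independent, since the function never hands back a list that a previous call already sorted.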

Test Example Code (Basic Benchmark Ex)

Let’s test our code using the pytest-benchmark plugin:

    import pytest

    @pytest.mark.sort_small
    def test_bubble_sort_small(sort_input1, benchmark):
        result = benchmark(bubble_sort, sort_input1)
        assert result == sorted(sort_input1)

    # The large-list test is marked with @pytest.mark.sort_large.

You can (and should) test this with a variety of inputs. To keep this example simple, we’ve used just 2: a small list and a larger one.

tests/conftest.py

    import pytest

    @pytest.fixture
    def sort_input1():
        return [5, 1, 4, 2, 8, 9, 3, 7, 6, 0]

We pass the input fixture sort_input1 as an argument along with the benchmark argument.

We then call the benchmark fixture with 2 arguments: the function to be benchmarked (bubble_sort() in this case) and the input list for that function.

Note the use of custom markers like @pytest.mark.sort_small and @pytest.mark.sort_large to group tests, which makes it easy to run just those tests.
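pytest warns about markers it does not know, so custom markers are usually registered. The sketch below does this from `tests/conftest.py` using the `pytest_configure` hook; the marker descriptions are my own wording, and registering them in `pytest.ini` would work just as well.

```python
# tests/conftest.py

def pytest_configure(config):
    """Register the custom markers so pytest does not warn about unknown marks."""
    config.addinivalue_line(
        "markers", "sort_small: benchmarks that use the small input list"
    )
    config.addinivalue_line(
        "markers", "sort_large: benchmarks that use the large input list"
    )
```

Once registered, the markers are also listed by `pytest --markers`.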

Running the test using

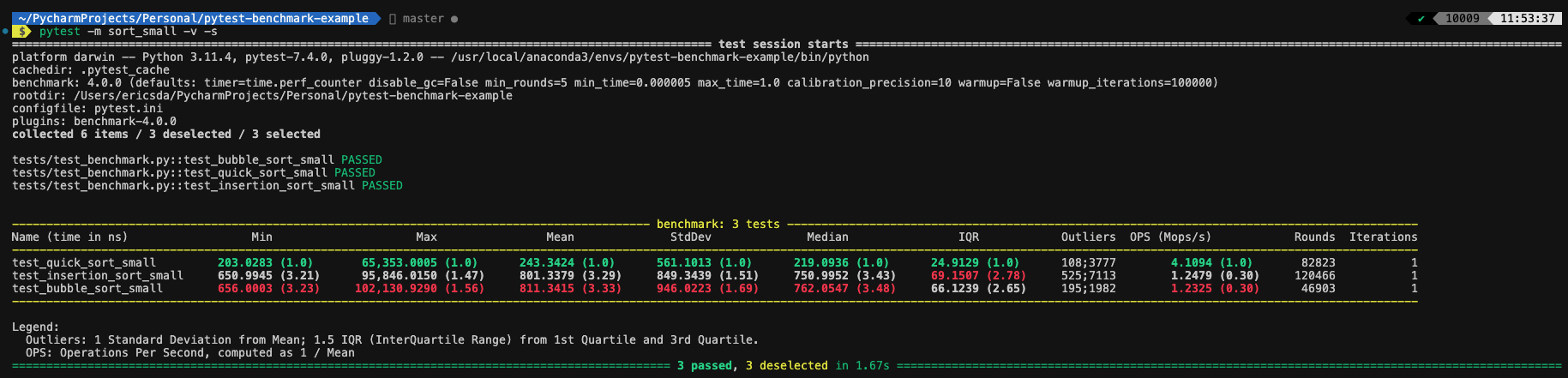

pytest -m sort_small -v -s

Analyzing Benchmark Results

Now let’s analyze the above result.

- Min Time: The shortest time recorded across all measured rounds. This shows the best-case scenario for your code’s performance.

- Max Time: The longest time recorded across all measured rounds. This shows the worst-case scenario for your code’s performance.

- Mean Time: The average time across all measured rounds. This is often the most important metric as it gives you an idea of the typical performance of your code.

- Std Deviation: The standard deviation of the execution times. It indicates how consistent the performance of your code is. Lower values suggest more consistent performance.

- Median Time: The middle value in the sorted list of execution times. It describes typical performance while being less affected by outliers than the mean.

- IQR (Interquartile Range): The range between the first quartile (25th percentile) and the third quartile (75th percentile) of the execution times. It measures the spread of the middle half of the measurements. A larger IQR indicates more variability in performance, while a smaller IQR suggests more consistent performance.

- Outliers: Measurements that deviate significantly from the rest, sitting far from the mean or median. pytest-benchmark reports two counts: rounds more than 1 standard deviation from the mean, and rounds more than 1.5 IQR below the first quartile or above the third quartile.

- OPS (Operations Per Second): The number of times the benchmarked function can run in one second, computed as 1 / Mean. Depending on the magnitude, it is shown as Kops/s (thousands) or Mops/s (millions of operations per second). Higher is better.

- Rounds: The number of timed measurements taken. Repeating the measurement over many rounds accounts for variations in performance caused by external factors.

- Iterations: The number of consecutive calls made within each round. Very fast functions are called several times per round so that the total is long enough to measure reliably and the impact of timer noise is reduced.
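To see how these numbers relate to each other, the sketch below computes the same kind of summary with only the standard library. It is an illustration of the definitions, not pytest-benchmark's implementation: the `summarize` helper is hypothetical, and details such as the quartile method may differ from what the plugin uses.

```python
import statistics
import timeit


def summarize(func, rounds=20, iterations=100):
    """Time `func` and return summary statistics in seconds per call."""
    # Each round times `iterations` back-to-back calls;
    # dividing by `iterations` gives the per-call time for that round.
    totals = timeit.repeat(func, repeat=rounds, number=iterations)
    times = [total / iterations for total in totals]
    q1, _, q3 = statistics.quantiles(times, n=4)
    mean = statistics.mean(times)
    return {
        "min": min(times),
        "max": max(times),
        "mean": mean,
        "stddev": statistics.stdev(times),
        "median": statistics.median(times),
        "iqr": q3 - q1,
        "ops": 1 / mean,  # calls per second
        "rounds": rounds,
        "iterations": iterations,
    }


data = [5, 1, 4, 2, 8, 9, 3, 7, 6, 0]
stats = summarize(lambda: sorted(data))
for name, value in stats.items():
    print(f"{name:>10}: {value:.3g}")
```

The exact values vary from run to run and machine to machine, which is why the spread metrics (Std Deviation, IQR) matter as much as the averages.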

pytest-benchmark analyzes these results and orders them from best (green) to worst (red).

If you’re more the visual type and enjoy graphs, pytest-benchmark can also generate histograms.

pytest --benchmark-histogram -m sort_large

We use the benchmark.timer() method to measure the time for each iteration.

After the loop, we calculate the average time per iteration.

Pytest Benchmark — Pedantic mode

For advanced users, pytest-benchmark also supports pedantic mode, which gives you direct control over how the benchmark is run.

Most people won’t need it, but if you feel the itch to tinker, you can customise it like this:

    benchmark.pedantic(
        target,
        args=(),
        kwargs=None,
        setup=None,
        rounds=1,
        warmup_rounds=0,
        iterations=1,
    )

Running the command below automatically saves the results to the .benchmarks folder.

pytest -m sort_large --benchmark-autosave

Compare

Past runs can be compared in the terminal with the command:

pytest-benchmark compare 0001 0002