02 - Packet’s load tolerance

Keeping up with packets

- bandwidth: measures capacity (a bigger pipe would mean higher bandwidth)

- latency: measures delay (how long the water in the pipe takes to reach its destination)

- throughput: measures the data actually transmitted and received during a specific time interval (how much water actually runs through the pipe in that time)

Example: you can have a 100 Gb/s NIC (bandwidth) but only be able to transmit at 40 Gb/s (throughput) because of overheads
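To make the bandwidth vs throughput gap concrete, here is a small sketch that only accounts for protocol headers and Ethernet framing. This is just one kind of overhead, and the notes do not say which overheads produce the 40 Gb/s figure, so the numbers below are an illustration under stated assumptions (standard Ethernet framing, IPv4 + TCP without options, payload of at least 6 bytes so no padding is needed). The function name `goodput_gbps` is made up for this example.

```python
# Per-frame overhead on the wire for standard Ethernet, in bytes.
PREAMBLE_SFD = 8      # preamble (7) + start frame delimiter (1)
ETH_HEADER = 14       # destination MAC, source MAC, EtherType
FCS = 4               # frame check sequence
INTERFRAME_GAP = 12   # mandatory idle time, expressed in byte times
IP_TCP_HEADERS = 40   # assumption: IPv4 (20) + TCP (20), no options


def goodput_gbps(link_gbps: float, payload_bytes: int) -> float:
    """Application-level throughput when every packet carries payload_bytes."""
    wire_bytes = (PREAMBLE_SFD + ETH_HEADER + IP_TCP_HEADERS
                  + payload_bytes + FCS + INTERFRAME_GAP)
    return link_gbps * payload_bytes / wire_bytes


# Same 100 Gb/s link, very different useful throughput.
for payload in (6, 100, 1460):
    print(f"{payload:>5} B payload -> {goodput_gbps(100, payload):.1f} Gb/s")
```

The smaller the packets, the larger the share of the pipe spent on headers and framing rather than on data.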

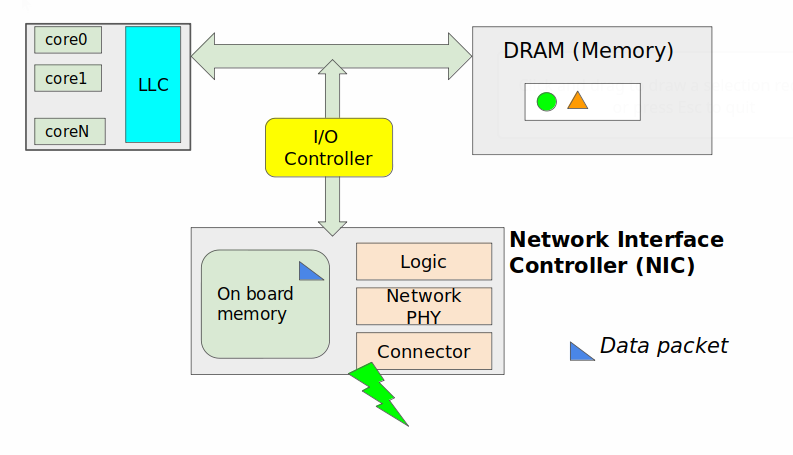

Recap: layered model

| Level | OSI | TCP/IP | Examples |
|---|---|---|---|
| 1 | physical | same | ethernet |
| 2 | data link | same | ethernet |
| 3 | network | same | ip |
| 4 | transport | same | tcp/udp |
| 5 | session | - | |
| 6 | presentation | - | |
| 7 | application | same | |

Socket

- first introduced in 1983

- a very useful level of abstraction: sockets are still in use today

- exposed as a Unix file with basic functions that can send and receive “data”

- sits between the transport and application layers

Everything else is managed by the OS!
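A minimal sketch of the abstraction, using Python's standard `socket` module. To stay self-contained it uses `socket.socketpair()`, which returns two already-connected local sockets instead of a real TCP connection. That is an assumption made for the example; a TCP socket would go through the same `sendall`/`recv` calls after connecting.

```python
import socket

# socketpair() returns two already-connected sockets, so no addresses
# or ports are needed for this demonstration.
left, right = socket.socketpair()

# Each socket is backed by an OS-level descriptor (an integer handle).
fd = left.fileno()

left.sendall(b"hello")        # hand the bytes to the OS
received = right.recv(1024)   # read them back from the other end

left.close()
right.close()

print(fd, received)
```

The application only sees "send bytes" and "receive bytes"; buffering and delivery are left to the OS.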

Questions

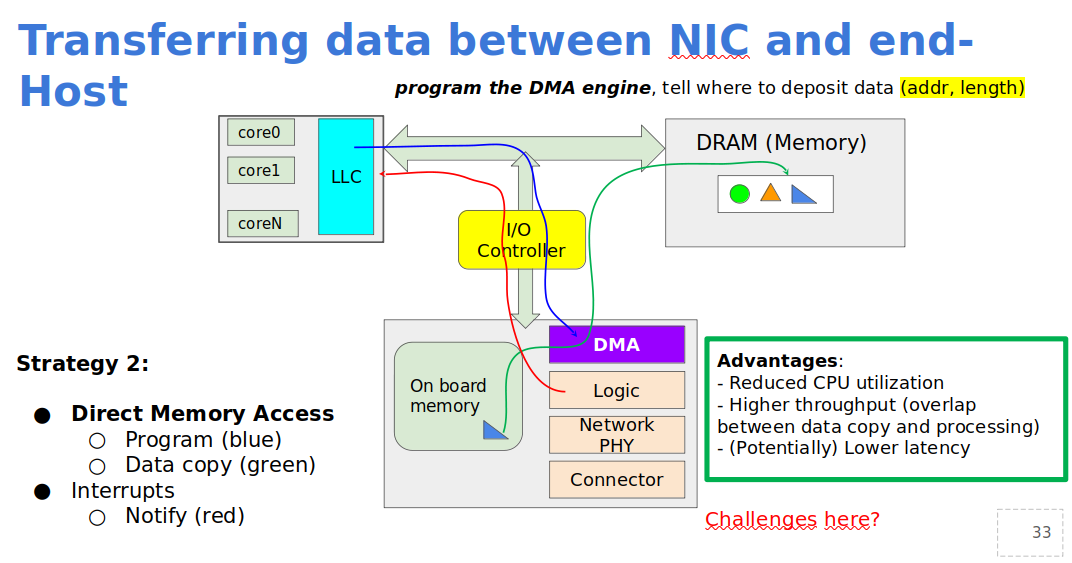

- transfer data between network controller and the end host

- Notify the end host about network packet reception

- we can use interrupts

- literally the OS saying “hey, *stop everything* … what do you need? … OK, now off you go” and then going back to listening

- if the CPU keeps getting interrupted, this leads to a livelock: a state where the system is perfectly able to work but spends all its time handling interrupts, so it gets no useful work done

- deadlock, for comparison: a process is blocked waiting for a resource it needs, and since that resource never becomes available, no work gets done

- With one interrupt per packet, an interrupt would occur every 6 ns. The CPU cannot sustain this, so here are some possible alternatives:

- interrupt coalescing (batching): generate an interrupt every n packets

- polling: disable interrupts altogether and have the CPU poll for new arrivals

- hybrid: a mix of these two

- interrupts → polling → interrupts

- there is a threshold: when the packet rate exceeds it, switch to polling; when the rate drops back below it, return to interrupts
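The options above can be sketched with a bit of arithmetic. The notes do not say where the 6 ns figure comes from; one set of assumptions that gives a similar number is a saturated 100 Gb/s link carrying minimum-size Ethernet frames (64 bytes, plus 8 bytes of preamble and 12 bytes of inter-frame gap, so 84 bytes on the wire). The function names, the batch size of 64, and the threshold value are all hypothetical and chosen only for illustration.

```python
def packet_interval_ns(link_gbps: float, wire_bytes: int) -> float:
    """Time between back-to-back packets on a saturated link, in ns."""
    return wire_bytes * 8 / link_gbps  # bits / (Gb/s) gives nanoseconds


def interrupts_per_second(link_gbps: float, wire_bytes: int,
                          packets_per_interrupt: int = 1) -> float:
    """Interrupt rate when one interrupt is raised every n packets."""
    packets_per_second = 1e9 / packet_interval_ns(link_gbps, wire_bytes)
    return packets_per_second / packets_per_interrupt


def choose_mode(packet_rate: float, threshold: float) -> str:
    """Hybrid scheme: poll above the threshold, use interrupts below it."""
    return "polling" if packet_rate > threshold else "interrupts"


# Assumption: 84 bytes on the wire per minimum-size frame.
print(f"interval: {packet_interval_ns(100, 84):.2f} ns")
print(f"no coalescing: {interrupts_per_second(100, 84):,.0f} interrupts/s")
print(f"every 64 pkts: {interrupts_per_second(100, 84, 64):,.0f} interrupts/s")

# Hypothetical traffic pattern (packets/s) and threshold.
rates = [1e3, 5e6, 1e8, 2e3]
modes = [choose_mode(rate, threshold=1e6) for rate in rates]
print(modes)
```

Coalescing divides the interrupt rate by n, while the hybrid scheme only pays the polling cost during traffic bursts. A real driver would likely add some hysteresis around the threshold to avoid flapping between modes.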

Advantages

- lower cpu utilization

- higher throughput

Disadvantages

- higher latency

- build a packet with multiple protocols and headers